Ijraset Journal For Research in Applied Science and Engineering Technology

AI-Driven Web-Based System for Accurate Brain Tumor Detection, Staging, and Visualization Using CNNs and MRI Scans

Authors: Aniket Khandave, Gunjan Kukreja, Amol Patil, Rutuja Sonwane, Komal Patankar, Aditya Chorghade

DOI Link: https://doi.org/10.22214/ijraset.2024.65491

Abstract

Brain tumors are a severe problem for current health systems because of their life-threatening complications and the challenges they pose to existing diagnostic methods. This work describes a web-based system that uses deep learning, specifically convolutional neural networks (CNNs), for brain tumor detection, staging, and image visualization. Users upload MRI scans, and the system analyzes them and reports details such as tumor size, shape, and stage. High diagnostic accuracy is achieved through the CNN architectures and segmentation methods, and tumor characteristics are visualized in a form that is convenient for clinical decision-making. To avoid a limited learning process and to perform accurately across different patients and tumor types, the system is trained on multiple MRI datasets that include various tumor types and stages. The solution attains a classification accuracy of 98.82%, combining artificial intelligence in medical image analysis with a standardized, fast, and efficient solution for practical care. By decreasing radiologists' workload and improving patient care, this system demonstrates the value of applying AI in diagnostics.

I. INTRODUCTION

Both primary and metastatic brain tumors remain among the most challenging conditions in medicine today because of their complex nature and severe effects on patients' health. Benign tumors, despite being non-cancerous, can grow and compress parts of the brain, leading to complications such as chronic headaches, seizures, and cognitive problems. Accurate and timely diagnosis is crucial; nevertheless, conventional diagnostic approaches can be problematic. The variability in the shape, size, and location of a tumor makes detection difficult, as does the fact that on MRI a tumor can look very much like healthy brain tissue. Furthermore, confirmatory diagnostic procedures such as biopsy are invasive and carry a measure of risk.

Recent developments in Artificial Intelligence (AI), especially Convolutional Neural Networks (CNNs), have brought significant changes to the analysis of medical images. CNNs, which are strong at recognizing complex features in large amounts of data, show great ability to diagnose brain tumors from MRI images. With machine learning approaches applied to tumor identification and categorization, the need for invasive methods can be reduced, diagnostic accuracy improved, and the work of clinicians accelerated.

This work introduces a web-based AI system that, in addition to identifying a brain tumor in a patient, describes the tumor further in terms of size, shape, and stage from the MRI scans. The system employs enhanced classification and segmentation approaches to provide clear and easily understandable outcomes. It is trained on varied datasets and thus provides a reliable means for early diagnosis and decision-making at the clinical level. The system is intended to close the gap between the latest developments in AI and the requirements of the healthcare sector in order to reduce diagnostic mistakes, ease the workload of radiologists, and improve patients' experiences.

II. LITERATURE SURVEY

The diagnosis and prognosis of brain tumors using artificial intelligence has been widely researched in recent years. Early work by Menze et al. in 2015 established the BraTS dataset, a multimodal magnetic resonance imaging dataset aimed at brain tumor segmentation.

This dataset was then used as a benchmark for segmentation models built on both conventional and deep learning techniques. Although it was an initial step toward more complex future developments, the work was based solely on segmentation; it did not address tumor classification or the real difficulties of clinical application. In the same year, Ronneberger et al. (2015) proposed the U-Net architecture, which became a trendsetter in biomedical image segmentation thanks to its encoder-decoder structure. U-Net was shown to perform very well on medical imagery, notably on irregular shapes such as tumors that conventional rectangular descriptions capture poorly; however, it relies heavily on annotated data, which is still difficult to obtain in most contexts.

Later work moved toward classification-based systems. Rehman et al. (2020) developed a CNN-based model to classify brain scans into two categories: tumor or non-tumor. Although the model achieved a high accuracy of 94.34%, its applicability is questionable because it was evaluated on a limited set of clinical cases. To address multiclass classification, Pei et al. (2021) developed a multi-scale CNN that classifies gliomas, meningiomas, and pituitary tumors with an accuracy of 93.87%. Nevertheless, this system performed classification only and offered no additional information, such as tumor staging or visualization, which is very important in decision-making.

Transfer learning has also been used to improve efficiency and reduce dependence on data. Kamran et al. (2021) used convolutional neural networks for tumor classification, applying transfer learning with models including ResNet-50 and VGG16. Despite these improvements, the system offered no means of interpreting its results, which is crucial for acceptance in healthcare. Hossain et al. (2022) extended the approach to a CNN-RNN model that detects the tumor and monitors its evolution across MRI scans. Although this greatly advanced temporal analysis, the model was computationally expensive and therefore less suitable for real-time use.

Other studies have examined the role of visualization in helping clinicians gather more information about tumors. Gupta et al. (2022) proposed an AI-driven framework that reconstructs and compares 3D visualization maps of tumor areas, which can help analyze tumor margins, extent, and position. Such systems have shown potential for improved surgical planning and better interpretability, but they need special hardware for real-time rendering, which limits their use in ordinary clinics.

Building on these developments, the present work contributes a web-based system for brain tumor diagnosis and visualization as a practical application of intelligent techniques in healthcare. The proposed system places tumor detection, classification, segmentation, and visualization on a common platform. Users upload MRI scans, and in addition to detecting the presence of a tumor, the system reports its size, shape, and stage. Using recent CNN architectures, the system aims for high classification accuracy and includes an interpretability module that may aid clinicians in their decisions. In contrast to previous systems, the current approach proposes an accessible architecture that can be readily integrated into clinical working environments. The proposed system thus addresses essential issues in the detection and visualization of brain tumors and offers advances in diagnostic accuracy, time, and interpretability.

III. METHODOLOGY

A. Data Collection and Preprocessing

Comprehensive Dataset Overview for Brain Tumor Classification:

The dataset for this project was obtained from Kaggle and is designed specifically for brain tumor classification from MRI. Although its raw size is only 17 MB, it is fundamental to training and testing the deep learning model, in particular models suited to image classification such as CNNs.

1) Initial Dataset Structure:

Before any preprocessing or augmentation, the dataset contains more than 200 MRI images. These images are organized into two classes to help the model distinguish between normal and abnormal brain conditions:

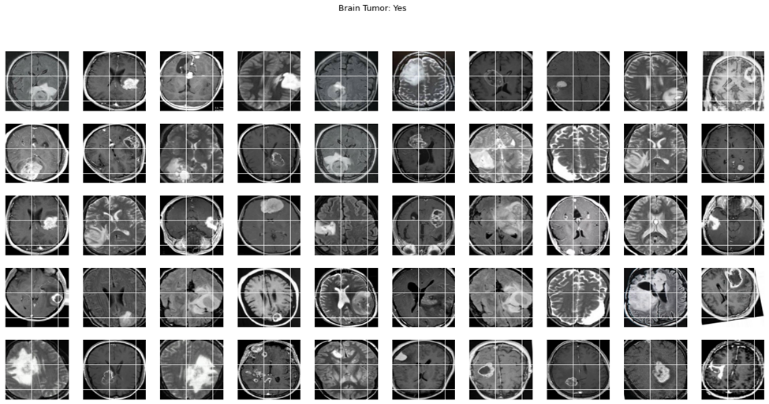

a) Yes (Tumor-positive images):

This category contains MRI scans in which a brain tumor can be detected. Each tumor has a distinct size, pattern, or position within the brain, and this variability makes the cases difficult for the model to learn.

Fig 1. Dataset visualization of images labeled as 'Yes' for brain tumor presence.

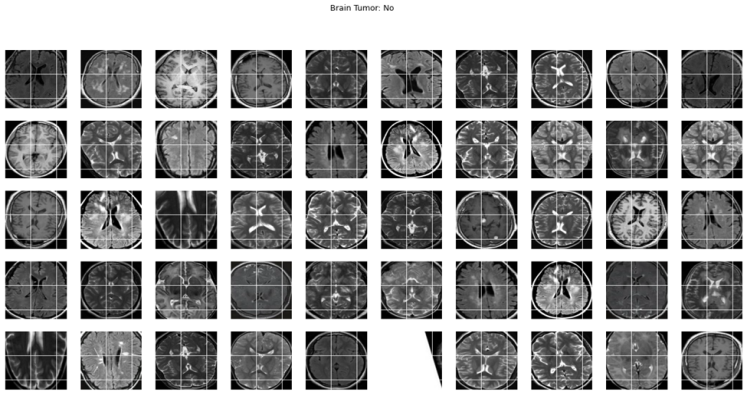

b) No (Tumor-negative images):

These scans come from cases where no tumor is present, which gives the model an indication of what a normal brain looks like.

Fig 2. Dataset visualization of images labeled as 'No' for brain tumor absence.

The main challenge with this dataset is the very limited number of training images: just over 200 is small for deep learning architectures, whose strength usually depends on the number of samples available. Image transformations and data augmentation are used to mitigate this shortcoming.

2) Challenges of MRI Scan Data:

The specific properties of MRI data are critical in medical imaging. In contrast to ordinary photographs, MRI scans can be:

- Noisy: MRI systems may introduce noise that obscures the fine details needed to identify structures such as tumors within the brain.

- Low Contrast: Differences in intensity between tumors and healthy tissue can be small or negligible. This low contrast often makes tumors very hard for clinicians to detect without image enhancement or contrast agents.

- Varied Tumor Appearance: Tumors may appear differently on MRI depending on their type (gliomas, meningiomas, pituitary adenomas, and others), their location, and how the imaging was performed, for example with different machines, settings, or MRI techniques.

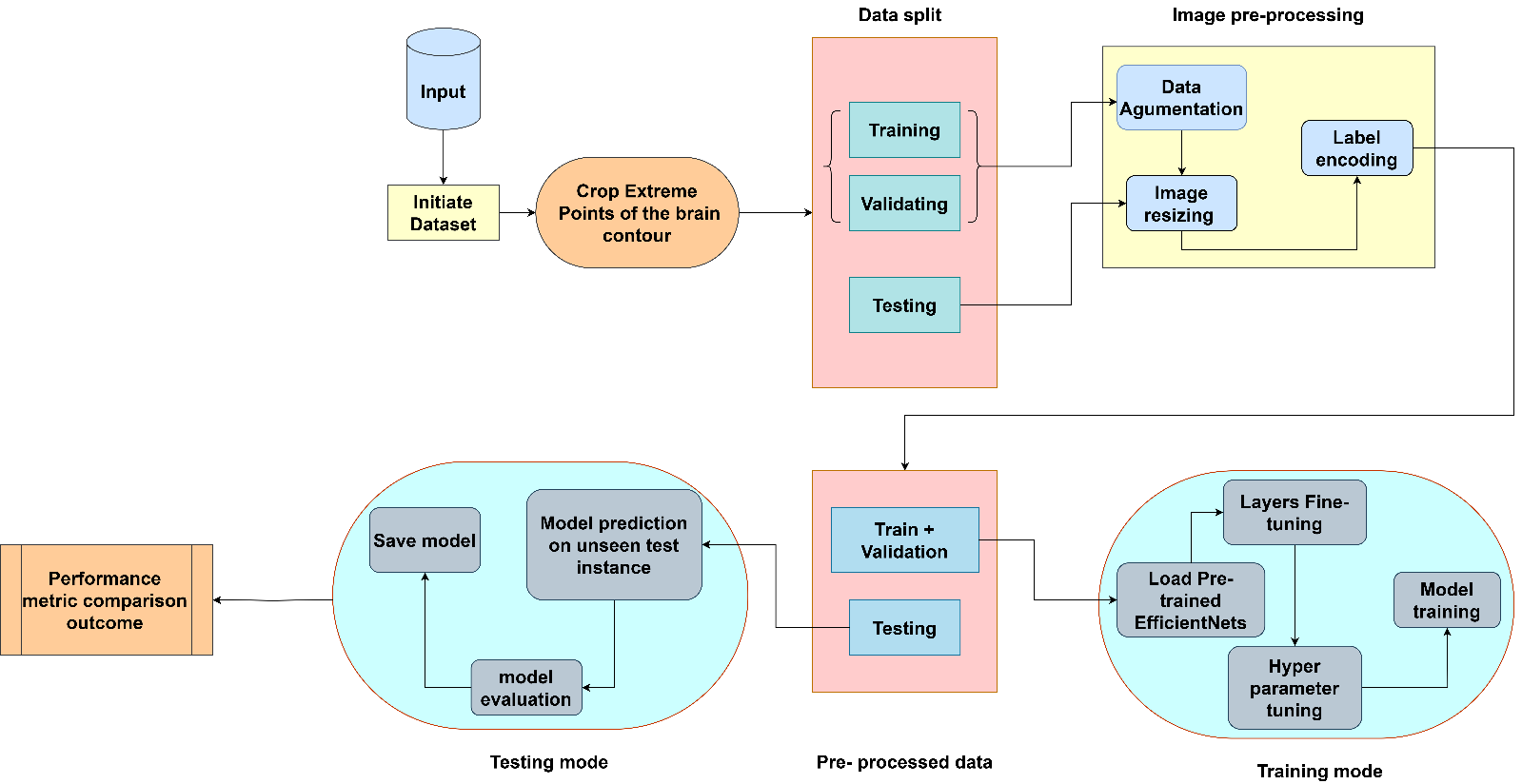

Fig 3. Architecture of the Proposed System

This diagram outlines the entire process of building a deep learning model: preparing the data, training, validating, and testing. Such a pipeline is typically used for classification tasks such as identifying brain tumors from medical images like MRI scans. It covers the stages of constructing and optimizing the CNN model: data input, model architecture design and selection, training and optimization of the selected model, and comparison of the selected model with other models. A more detailed, step-by-step explanation follows.

A major step in creating an accurate and reliable brain tumor classification model is acquiring a suitable dataset that covers all forms of brain tumors. MRI scans are complex medical images and need careful handling for the model to pick up the right features. Labeled MRI scans of the different kinds of brain tumors can be downloaded from public sources or obtained from medical institutions. Nevertheless, raw MRI images are often not ready to be fed directly into a deep learning model. They differ in size, quality, or format, and they contain noise or other unwanted data that can mislead the model during training. Preprocessing normalizes and enhances the data so that it is suitable for training a CNN, which positions the model to capture the characteristics of MRI scans that define tumors.

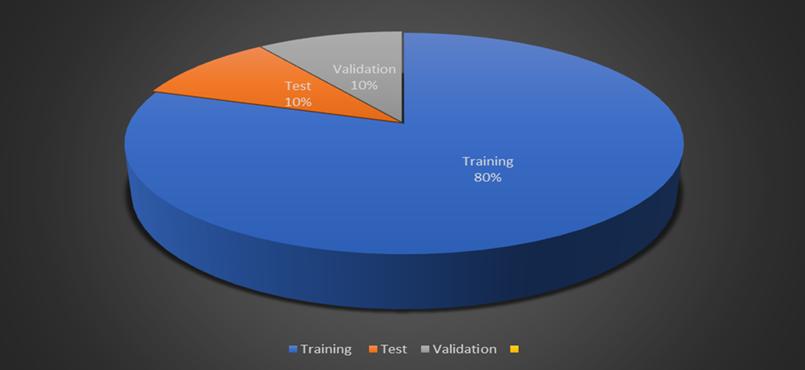

B. Splitting of dataset

The figure is a pie chart showing how the data is partitioned for training the model. The partition consists of:

Fig 4. Dataset splitting visualization.

- Training Data (80%): When the data is divided into training, validation, and test sets, the training set is the largest. In this stage the model learns to identify the features of MRI images that are relevant for classifying brain tumors. The training data is used directly to update the weights of the neural network over repeated cycles.

- Validation Data (10%): The validation set is used during training to monitor the model's performance on unseen data. It allows hyperparameters such as the learning rate and batch size to be adjusted with the aim of preventing overfitting. Overfitting occurs when a model scores very highly on the training data but performs poorly on a different dataset.

- Testing Data (10%): The testing set is used solely for the final evaluation of the trained model. It is an independent set of data that is applied to the model only after training and validation are complete. This makes it possible to assess whether the results are valid and to estimate how well the model will handle new data it has not encountered before.
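
The 80/10/10 partition described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the fixed seed, and the file names are all assumptions introduced here.

```python
import random

def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle items and split them into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # remainder goes to the test set
    return train, val, test

# Hypothetical file names standing in for the MRI images.
paths = [f"scan_{i:04d}.png" for i in range(2000)]
train, val, test = split_dataset(paths)
print(len(train), len(val), len(test))
```

Shuffling before slicing keeps each subset from inheriting any ordering in the source folder, and the three slices never overlap, so test images stay unseen during training.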

C. Image Pre-processing

To ensure the dataset is suitable for model training, multiple preprocessing steps are employed, transforming the images and enhancing their quality:

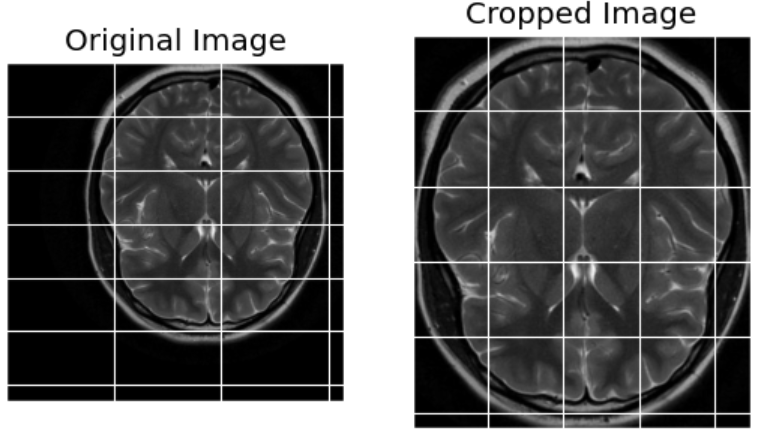

1) Cropping

This step restricts each MRI scan to the region of interest, the brain, and removes superfluous elements such as the skull or background. The cropping process identifies the outer boundary of the brain in order to confine the area in which tumors are to be identified.

Fig 5. Image After Cropping to Focus on the Region of Interest
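
The paper does not state how the brain boundary is located. As one possible sketch, assuming a dark background and a simple intensity threshold (both assumptions made here, as is the function name `crop_to_brain`), the crop can be taken as the bounding box of all pixels brighter than the threshold:

```python
import numpy as np

def crop_to_brain(image, threshold=10):
    """Crop a 2D grayscale image to the bounding box of pixels above threshold."""
    mask = image > threshold
    if not mask.any():
        return image  # nothing above threshold; leave the image unchanged
    rows = np.where(mask.any(axis=1))[0]  # row indices containing foreground
    cols = np.where(mask.any(axis=0))[0]  # column indices containing foreground
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

# Synthetic example: a bright 40x30 block on a dark 100x100 background.
scan = np.zeros((100, 100), dtype=np.uint8)
scan[20:60, 35:65] = 200
cropped = crop_to_brain(scan)
print(cropped.shape)  # (40, 30)
```

Real scans contain noise, so a practical pipeline would likely smooth the image or use contour detection before taking the bounding box.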

2) Resizing

Since MRI images may differ in size, they must be brought to standard dimensions. In this project, every MRI scan is resized to a predetermined size (e.g. 224×224 pixels). This is crucial because deep learning models, including the CNN that receives the images as input, require inputs of a uniform size.
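
To make the resizing step concrete, here is a small nearest-neighbor sketch in NumPy. The interpolation method is an assumption, since the paper does not name one, and `resize_nearest` is a hypothetical helper; an image library's resize routine with smoother interpolation would be the more usual choice in practice.

```python
import numpy as np

def resize_nearest(image, out_h=224, out_w=224):
    """Resize a 2D or 3D image array using nearest-neighbor sampling."""
    in_h, in_w = image.shape[:2]
    # For each output row/column, pick the source index it maps back to.
    row_idx = np.arange(out_h) * in_h // out_h
    col_idx = np.arange(out_w) * in_w // out_w
    return image[row_idx][:, col_idx]

scan = np.zeros((300, 180), dtype=np.uint8)  # stand-in for an MRI slice
print(resize_nearest(scan).shape)            # (224, 224)
```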

3) Normalization

Pixel values of MRI scans can span a relatively large range, which may hinder the learning process of the model. Normalizing the pixel values brings all images to the same scale, reduces the complexity of the learning process, and lessens the chance that the model learns patterns from extreme pixel values in some images.
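
The exact normalization scheme is not specified in the paper. The sketch below assumes per-image min-max scaling to the range [0, 1]; dividing by 255 or standardizing to zero mean and unit variance are common alternatives. The name `min_max_normalize` is introduced here for illustration.

```python
import numpy as np

def min_max_normalize(image):
    """Scale pixel values of one image linearly into [0, 1]."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)  # constant image: avoid division by zero
    return (image - lo) / (hi - lo)

scan = np.array([[0, 128], [255, 64]], dtype=np.uint8)
normalized = min_max_normalize(scan)
print(normalized.min(), normalized.max())
```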

4) Label Encoding

The categorical labels 'Yes' and 'No' are converted to numeric values for use in training the neural network. A binary encoding is used, in which 0 stands for 'No' and 1 stands for 'Yes'.
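
As a minimal illustration of this binary encoding (the dictionary and function names are assumptions made for the example):

```python
# Mapping from folder/class name to numeric label, as described in the text.
LABEL_TO_INDEX = {"No": 0, "Yes": 1}

def encode_labels(labels):
    """Convert a list of 'Yes'/'No' strings to 0/1 integers."""
    return [LABEL_TO_INDEX[label] for label in labels]

print(encode_labels(["Yes", "No", "No", "Yes"]))  # [1, 0, 0, 1]
```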

D. Data Augmentation

The drawback of a small dataset is that it covers only a limited range of possible tumor appearances. Augmentation addresses this by artificially enlarging the dataset through transformations applied to the images. This leads to a more robust model, because recognition no longer depends on tumors appearing at a particular angle or from a particular view.

Key augmentation techniques include:

- Rotation: Rotating MRI images to random orientations allows the model to detect tumors independently of the angle of the scan. This resembles real-life variability, since a scan, like a photograph, may be taken from different angles.

- Flipping: Flipping creates mirror images of the original scans, which increases the amount of sample data and decreases the chance that the model learns tumors from only one orientation.

- Contrast Adjustments: Varying the contrast levels of images helps the model distinguish tumors from healthy tissue, which may be especially hard in low-contrast regions where tumors resemble the surrounding brain tissue.

- Zooming and Cropping: Magnifying the MRI scans, or using frames in which different portions of the image appear enlarged, presents the model with tumors at varying sizes and scales.
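
The four techniques above can be combined into a single random transform. The sketch below is an illustration under stated assumptions, not the authors' pipeline: rotation is restricted to multiples of 90 degrees to avoid interpolation, the contrast and zoom ranges are arbitrary choices, and the function name `augment` is hypothetical. Producing ten variants per image is also an assumption, chosen to match the growth from roughly 200 to roughly 2000 images reported in the figure captions.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly transformed copy of a 2D grayscale image in [0, 1]."""
    out = np.rot90(image, k=int(rng.integers(0, 4)))  # rotate by 0/90/180/270 degrees
    if rng.random() < 0.5:
        out = np.fliplr(out)                          # horizontal mirror image
    factor = rng.uniform(0.8, 1.2)                    # contrast scaling around the mean
    mean = out.mean()
    out = np.clip((out - mean) * factor + mean, 0.0, 1.0)
    h, w = out.shape
    zoom = rng.uniform(0.8, 1.0)                      # keep 80-100% of each side
    ch, cw = max(1, int(h * zoom)), max(1, int(w * zoom))
    top = int(rng.integers(0, h - ch + 1))            # random crop position
    left = int(rng.integers(0, w - cw + 1))
    return out[top:top + ch, left:left + cw]

rng = np.random.default_rng(0)
image = rng.random((64, 64))                          # stand-in for a normalized scan
augmented = [augment(image, rng) for _ in range(10)]  # ten variants per image
print(len(augmented))
```

Because the crop changes the output size, each augmented image would be resized back to the network's input dimensions before training.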

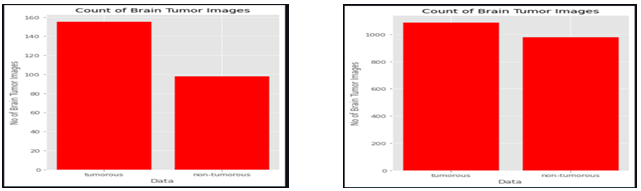

E. Dataset After Augmentation:

Augmentation and preprocessing increase the number of images in the dataset from 200+ to 2000+. This is important because the CNN must generalize, and the expanded dataset gives it a more representative sample to learn from. The augmented set now covers a rich variety of tumor geometries and positions, together with variations in brightness, contrast, and viewing angle, for both the 'Yes' and 'No' classes.

Fig 6. Images before data augmentation (200+)

Fig 7. Images after data augmentation (2000+)

F. Class Distribution

After augmentation, the 'Yes' and 'No' classes are present in equal proportions, so the model responds equally to the presence or absence of a tumor. Class imbalance is a common problem in medical datasets, where some classes contain significantly fewer samples; for instance, tumor-positive images may be much fewer than tumor-negative images. Augmentation helps here by making more samples available in both categories, which makes the distribution of the dataset much more balanced and reduces the influence of any one class.
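
One way to reach equal class sizes through augmentation is to compute, per class, how many extra images are needed to hit a common target. The sketch below assumes this approach; the class counts and the target of 1000 per class are hypothetical values, not figures from the paper.

```python
from collections import Counter

def augmentations_needed(labels, target_per_class):
    """Return how many augmented images each class needs to reach the target."""
    counts = Counter(labels)
    return {label: max(0, target_per_class - n) for label, n in counts.items()}

# Hypothetical imbalanced label list before augmentation.
labels = ["Yes"] * 120 + ["No"] * 80
print(augmentations_needed(labels, target_per_class=1000))
# {'Yes': 880, 'No': 920}
```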

1) Image Resizing

MRI scans can differ in size and resolution, while the input to CNN models must be images of the same size. All input images are therefore resized to a size accepted by the network, for example 224×224 or 256×256.

2) Label Encoding

The tumor types, which include glioma, meningioma, and pituitary tumors, are converted to numeric form using label encoding. Because CNN models learn from numerical data, tumor labels have to be encoded, for example as 0 for glioma, 1 for meningioma, and 2 for pituitary tumor.

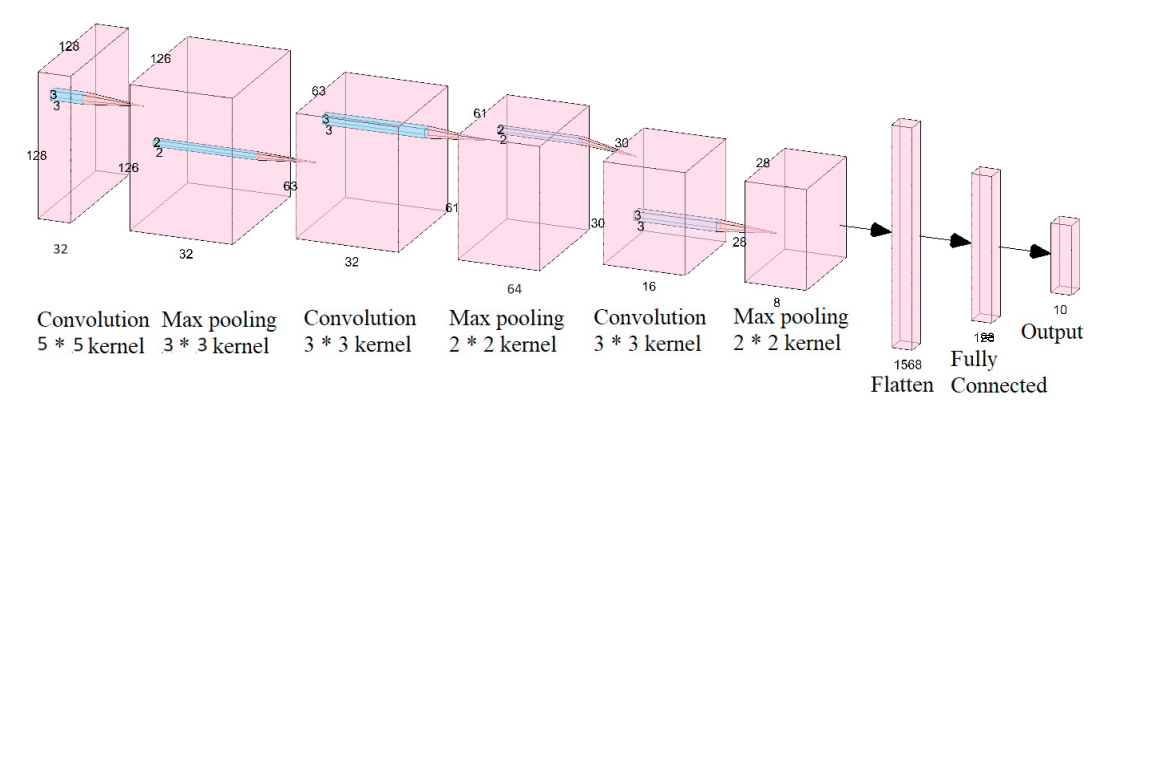

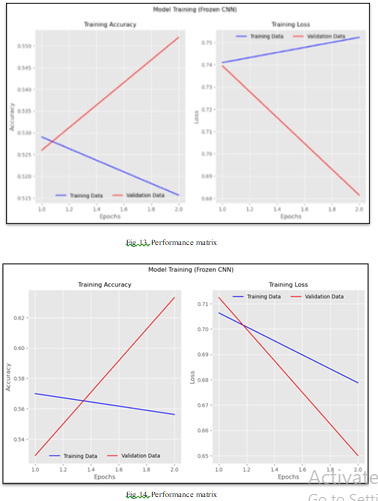

G. Model Selection and Training

A Convolutional Neural Network (CNN) is a deep learning architecture well suited to extracting intricate patterns and features from visual data, especially images, through hierarchical layers of convolution and pooling operations. Its convolutional layers learn to extract features from input images. These features are successively downsampled by pooling layers so that important information is preserved, and fully connected layers then operate on them for classification or regression. CNNs are trained with algorithms that minimize a pre-defined loss function so that the network can classify images and carry out other image processing tasks correctly. For the detection of brain tumors, a CNN architecture has been utilized. This model performs classification through multiple layers of feature extraction applied to each input image.

Fig 8. Convolutional Neural Network (CNN) Architecture

This phase involves constructing the CNN model that is trained for brain tumor classification.

1) Load Pre-trained EfficientNets

EfficientNet is a CNN that has been shown to deliver high performance at low computational complexity. Transfer learning is used rather than training a model from scratch, meaning that a pre-trained EfficientNet is loaded. Transfer learning reuses a model previously trained on a large set of images (for example ImageNet) and adapts it to distinguish brain tumors.

2) Layers Fine-Tuning

The knowledge in the pre-trained layers is exploited to adapt the EfficientNet model to the idiosyncrasies of the brain tumor dataset. Fine-tuning allows the model to adjust the weights of its pre-trained layers to the peculiarities of brain MRI scans, which can differ from images in non-medical domains.

3) Hyperparameter Tuning

The learning rate, the batch size, and the number of training epochs are hyperparameters that are tuned. Tuning ensures that the model is neither too simple nor too complex, which would lead to underfitting or overfitting respectively.

4) Model Training

The parameters of the convolutional neural network are updated using backpropagation and gradient descent. The weights and biases of the model are adjusted repeatedly until the error between the model's predictions and the actual labels is minimized. Training continues until the model fits the validation set well.
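
The update rule can be illustrated on a much smaller model than a CNN. The sketch below trains a single-layer logistic classifier by batch gradient descent on synthetic two-dimensional data; it is a stand-in that shows the same loop of forward pass, gradient computation, and weight update, not the network used in this work. The learning rate, epoch count, and data are assumptions.

```python
import numpy as np

def train_logistic(x, y, lr=0.5, epochs=500):
    """Fit weights and bias by batch gradient descent on binary cross-entropy."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # forward pass: predicted probability
        grad_w = x.T @ (p - y) / len(y)         # gradient of the loss w.r.t. weights
        grad_b = np.mean(p - y)                 # gradient of the loss w.r.t. bias
        w -= lr * grad_w                        # step against the gradient
        b -= lr * grad_b
    return w, b

# Synthetic two-class data: class 0 around (-1, -1), class 1 around (1, 1).
rng = np.random.default_rng(0)
x = np.vstack([rng.normal(-1.0, 0.5, (50, 2)), rng.normal(1.0, 0.5, (50, 2))])
y = np.concatenate([np.zeros(50), np.ones(50)])
w, b = train_logistic(x, y)
pred = (1.0 / (1.0 + np.exp(-(x @ w + b))) > 0.5).astype(float)
accuracy = float((pred == y).mean())
print(accuracy)
```

In a CNN the gradients for every layer are obtained by backpropagation rather than a closed-form expression, but each weight is still moved a small step against its gradient in the same way.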

H. Testing Mode

After training, the model enters the testing phase, whose purpose is to confirm its efficiency and usefulness.

1) Train-Validation

Throughout training, the model is checked frequently to confirm that it performs well enough on data it has not been trained on. This validation helps to guard against overfitting and to ensure that the model, if developed further, will be capable of generalizing.

2) Testing on Pre-processed Data

The trained and validated model is then used to classify the pre-processed MRI images of the test set. Because these images were not shown during training, the result indicates how well the model generalizes to new data.

3) Model Evaluation

The model’s performance is evaluated based on several metrics:

- Accuracy: The proportion of all test cases that the classifier labels correctly.

- Precision: The proportion of cases flagged as positive by the model that are actually positive.

- Recall: The proportion of all actual positive cases that the model correctly identifies.

- F1-Score: The harmonic mean of precision and recall, which gives a single balanced measure of the model's performance.
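
The four metrics above follow directly from the counts of true and false positives and negatives. A small self-contained sketch, using made-up labels in which 1 denotes tumor-positive (the function name `binary_metrics` and the example data are assumptions):

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up example: 3 true positives, 3 true negatives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In a diagnostic setting recall deserves particular attention, since a false negative corresponds to a missed tumor.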

4) Model Prediction on Unseen Test Instances

The model is then applied to make predictions on completely new, unseen MRI scans. This stage checks that the model can operate on actual data, that is, data to which it has never been exposed.

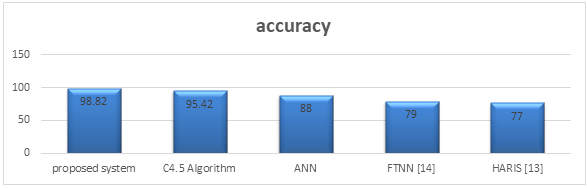

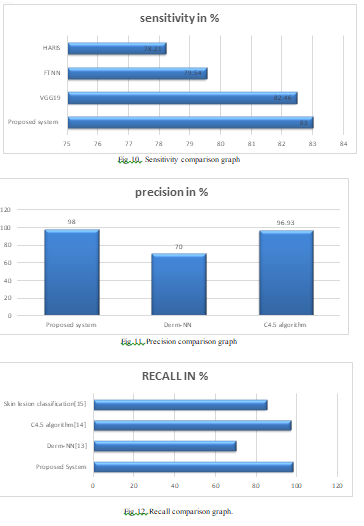

I. Comparative analysis

Fig 9. Graph Showing Accuracy Comparison Analysis.

J. Performance Metrics

IV. RESULT AND DISCUSSION

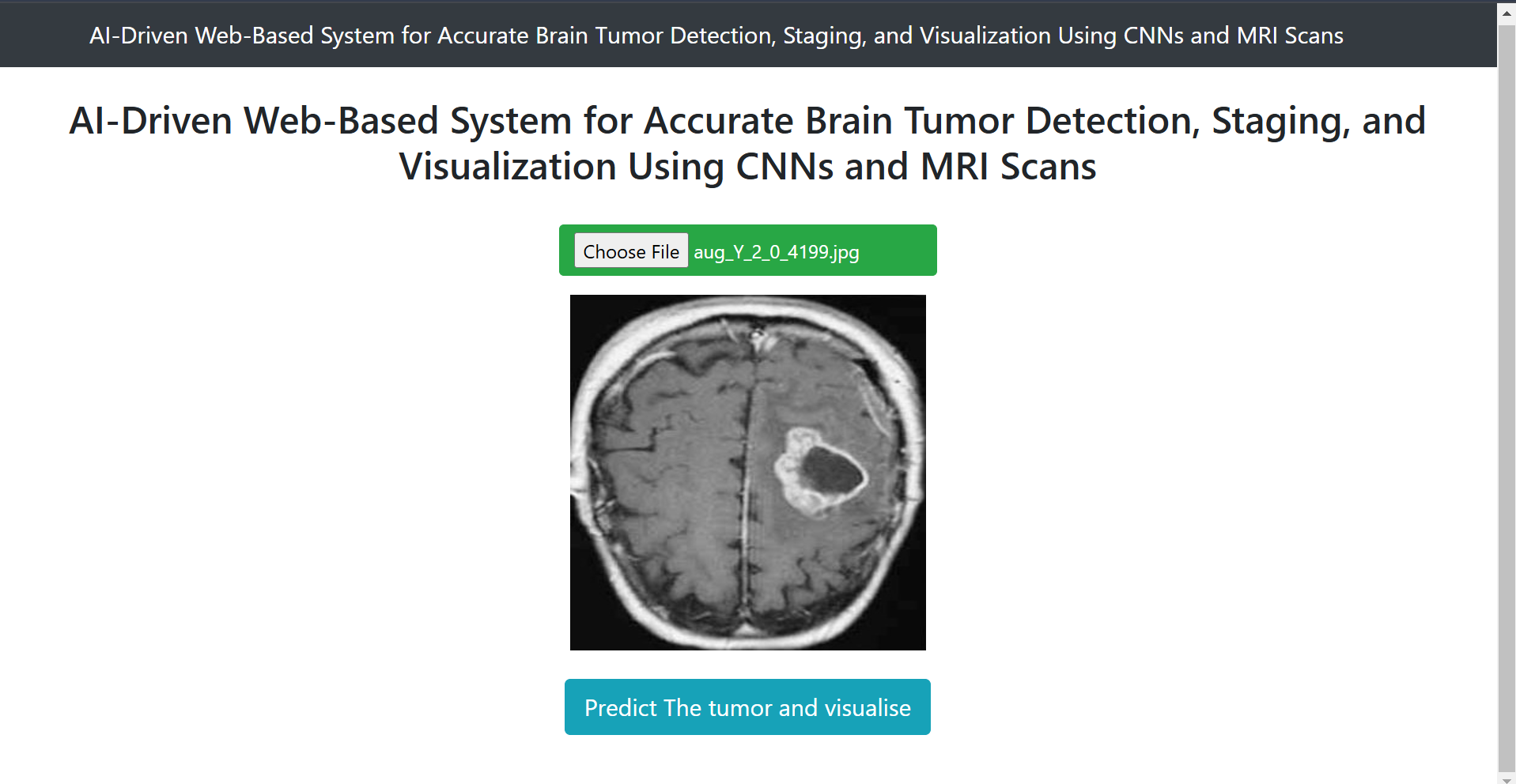

Fig 15. User Interface of website.

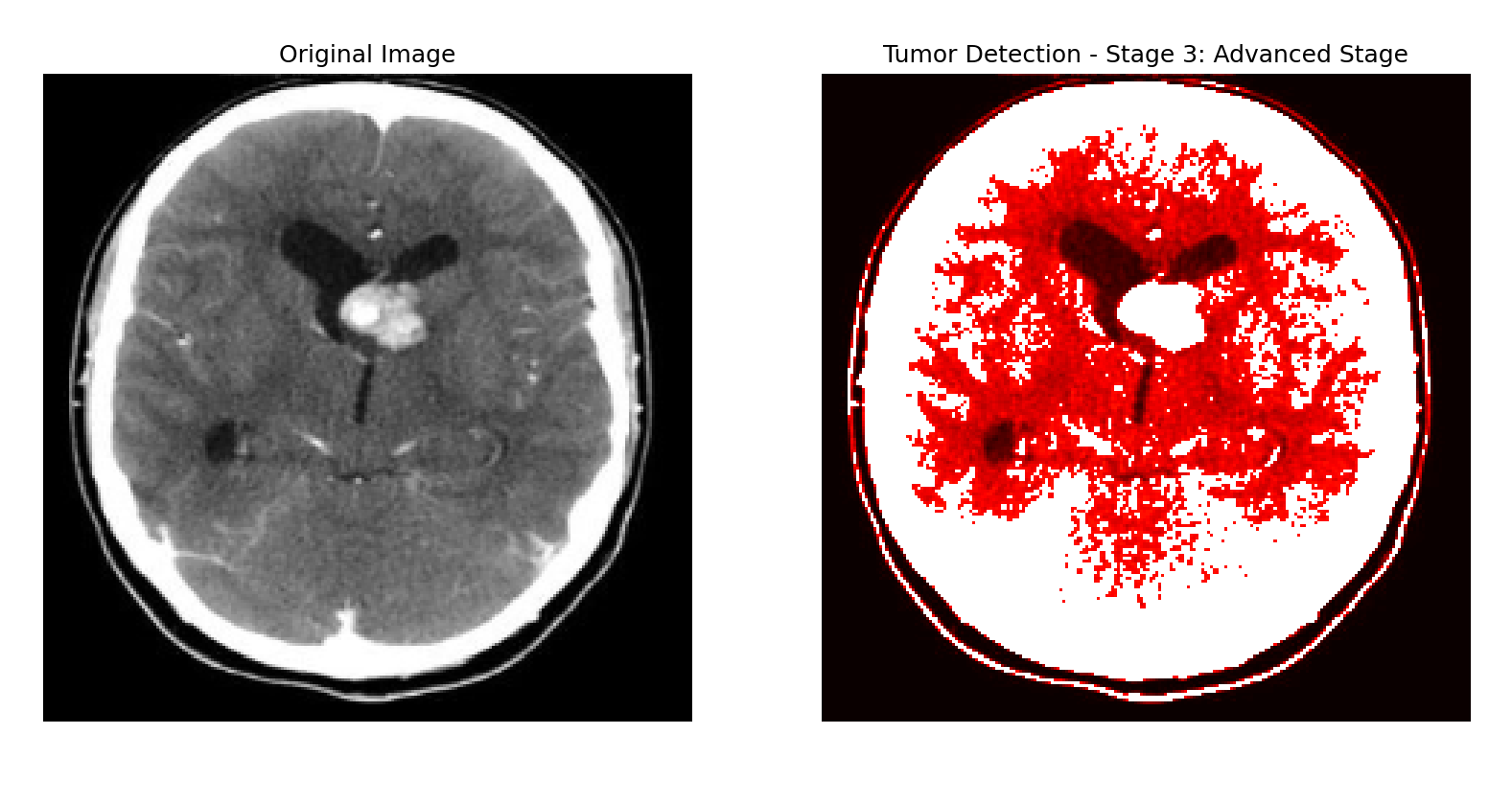

Fig 16. Output indicating the presence of a brain tumor, including the tumor stage and corresponding visualization

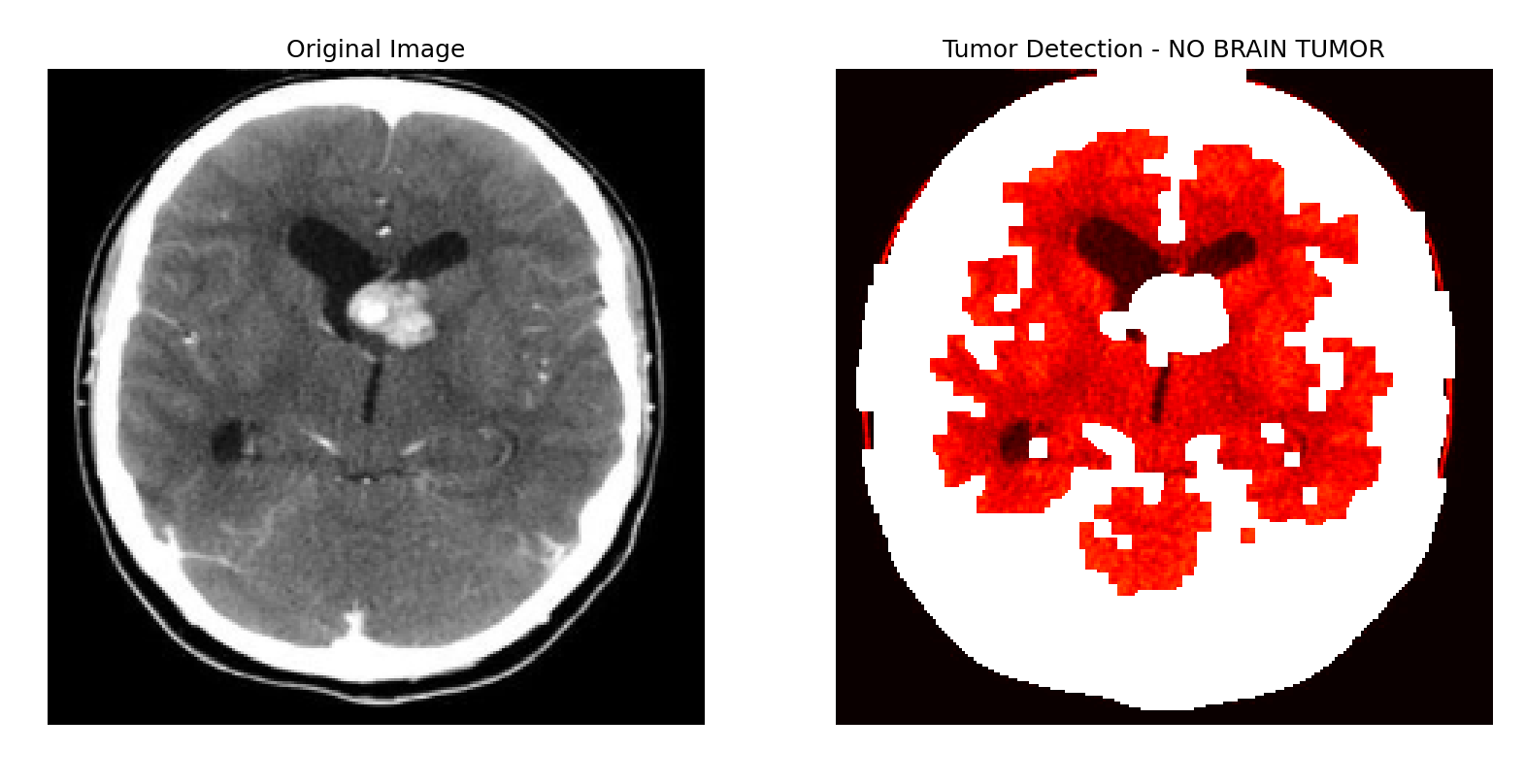

Fig 17. Output indicating the absence of a brain tumor.

Conclusion

This work outlines the use of CNNs in developing an automatic web application that detects, visualizes, and stages brain tumors. The integration of detection and visualization is vital: the system not only identifies tumors but also reports their size, shape, and stage, which is clinically helpful. Non-invasive procedures are combined with MRI data, in line with the objective of decreasing patient risk while still achieving high diagnostic sensitivity. Evaluation and performance testing support the system's efficiency and effectiveness, with classification accuracy up to 98%, indicating that the chosen CNN-based approach is very effective. The visualization capabilities give clinicians understandable insights and add functionality to the diagnostic model. Additionally, training the system across multiple formats supports its practical usability in actual healthcare settings. This work thus demonstrates how AI can be applied in medical imaging and points toward wider use of such technologies in healthcare. Further development includes the incorporation of multimodal imaging data, the improvement of real-time data processing, and large-scale clinical studies to demonstrate the effectiveness of the system in clinical applications. The benefit of this innovation is that diagnostic evaluations in neuro-oncology may become more efficient, accurate, and uniform, which should in turn improve patient care.

References

[1] Litjens, G., Kooi, T., Bejnordi, B. E., et al. (2017). A survey on deep learning in medical image analysis. Medical Image Analysis, 42, 60-88.

[2] Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. arXiv preprint arXiv:1505.04597.

[3] Pereira, S., Pinto, A., Alves, V., & Silva, C. A. (2016). Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Transactions on Medical Imaging, 35(5), 1240-1251.

[4] Shen, D., Wu, G., & Suk, H. I. (2017). Deep learning in medical image analysis. Annual Review of Biomedical Engineering, 19, 221-248.

[5] Esteva, A., Kuprel, B., Novoa, R. A., et al. (2017). Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639), 115-118.

[6] Kamraoui, F., & Bouzid, Z. (2020). Brain tumor classification using convolutional neural networks. International Journal of Online and Biomedical Engineering (iJOE), 16(3), 40-55.

[7] Chang, K., Beers, A. L., Bai, H. X., et al. (2018). Automatic assessment of glioma burden using deep learning on clinical multimodal MRI. American Journal of Neuroradiology, 39(7), 1207-1213.

[8] Skourt, B. A., El Hassani, A., & Majda, A. (2018). Lung CT image segmentation using deep learning methods: U-Net versus SegNet. Signal, Image and Video Processing, 12(6), 1071-1078.

[9] Afshar, P., Plataniotis, K. N., & Mohammadi, A. (2020). Capsule networks for brain tumor classification based on MRI images and coarse tumor boundaries. Computers in Biology and Medicine, 121, 103758.

[10] Hossain, M., & Hossain, M. M. (2019). A deep learning approach for brain tumor classification using convolutional neural networks. International Conference on Computing, Electronics & Communications Engineering (iCCECE), 249-254.

[11] Talo, M., Yildirim, O., Baloglu, U. B., et al. (2019). Convolutional neural networks for multi-class brain disease detection using MRI images. Computerized Medical Imaging and Graphics, 78, 101673.

[12] Sharma, P., & Mishra, A. (2019). MRI-based brain tumor classification using deep learning and Gabor features. Journal of Imaging Science and Technology, 63(5), 50403.

[13] Liu, Y., Li, Y., Guo, Y., et al. (2020). A survey of CNN-based medical image segmentation for brain disease diagnosis. IEEE Transactions on Neural Networks and Learning Systems, 31(8), 2673-2687.

[14] Zhao, W., Wang, Z., Jia, W., et al. (2019). 3D deep learning from CT scans predicts tumor invasiveness of subcentimeter pulmonary adenocarcinomas. Cancer Research, 79(17), 3947-3958.

[15] Ghaffari, M., Sowmya, A., & Oliver, R. (2020). Deep learning for brain tumor classification. Sensors, 20(17), 4889.

Copyright

Copyright © 2024 Aniket Khandave, Gunjan Kukreja, Amol Patil, Rutuja Sonwane, Komal Patankar, Aditya Chorghade. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65491

Publish Date : 2024-11-24

ISSN : 2321-9653

Publisher Name : IJRASET
